How to (Theoretically) Replace Your Judge with AI

As artificial intelligence (“AI”) grows more capable, so does the number of professions in which humans see their jobs taken over by computers. A visit to “WillRobotsTakeMyJob.com” yields some less-than-comforting predictions: accountants and auditors are listed as having a 94% chance of being replaced by AI; occupational therapists, 0%; and judges, the subject of this article, 40%. This article will outline how to make an AI system suitable for judicial replacement and see what kind of threat our autonomous adjudicator actually poses to the job security of our current non-mechanical magistrates.

AI’s Algorithm:

Most modern AI uses “machine learning”: algorithms and statistical models that perform a specific task without explicit instructions, relying instead on patterns in collected data and inference. Such AI can collect astronomical numbers of data points and, from that data set, autonomously make determinations. This is not so unlike the judge, who looks at historical sets of data (precedent) to determine the appropriate action (a ruling) when faced with novel fact patterns (legal disputes). At first glance, AI appears to be well suited for a judicial position: all we would need to do is distill legal application into a formula that can be inputted into a computer and feed data from previous rulings into the computer’s machine learning process. We would then have AIs that can make determinations of law more quickly and correctly than humans.

In order to even suggest that legal application can be reduced to a mathematical formula, it must first be appreciated that “the law” and the reasoning that is its predicate follow a certain pattern: a pattern from which we can discern models of expected outcomes. Once we have established what that model of law looks like, we can compare it with mathematical models that share its shape. If a comparable mathematical model is driven by a formula, and that formula can be inputted into a computer system to direct it, then so too can the legal model.

Legal Application’s Pattern:

Consider a law prohibiting driving or parking vehicles in a park. The law’s legal restriction could be memorialized and posted on a sign near the entrance to a park and plainly read “NO VEHICLES IN THE PARK” (the “No Vehicle Sign”). An AI judge could thus implement this law by the formula “if individual is in the park and driving a vehicle, then individual is guilty of violating the NO VEHICLES IN THE PARK rule.” And, since this is a simple law, this formula would correctly enforce the law in every situation, right?
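The literal formula above can be written down in a few lines. This is only a minimal sketch: the `Situation` type and its two fields are names invented here for illustration, and the function encodes nothing beyond the sign's plain text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Situation:
    """Hypothetical fact pattern; both fields are illustrative assumptions."""
    in_park: bool
    using_vehicle: bool


def violates_no_vehicle_sign(s: Situation) -> bool:
    # Literal reading of the sign: in the park AND using a vehicle.
    return s.in_park and s.using_vehicle


# A driver inside the park is flagged; a pedestrian is not.
driver = Situation(in_park=True, using_vehicle=True)
pedestrian = Situation(in_park=True, using_vehicle=False)
print(violates_no_vehicle_sign(driver), violates_no_vehicle_sign(pedestrian))
```

Note that this function has no way to distinguish one driver from another: anyone in the park with a vehicle is treated identically.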

Expanding our hypothetical further, assume a police officer is called to a park bearing the No Vehicle Sign pursuant to reports that someone is driving a vehicle in the park. The officer drives his squad car into the park and finds two other people with vehicles. First is Colton, who is tearing donuts through the park’s garden in a stolen BMW. Second is Bill, who is on his way to repair the park’s gardens in a landscaping truck. Which of these men is in violation of the park law that states, “NO VEHICLES IN THE PARK”?

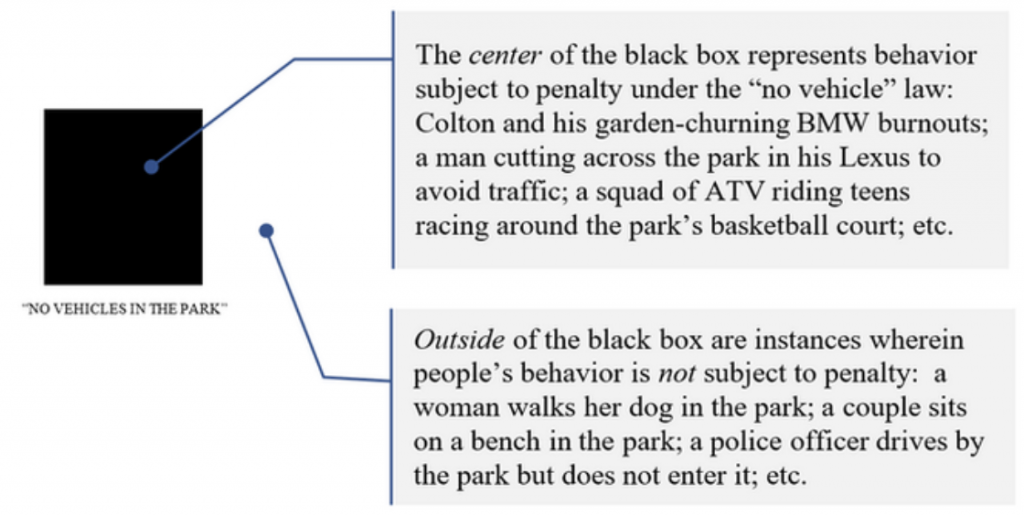

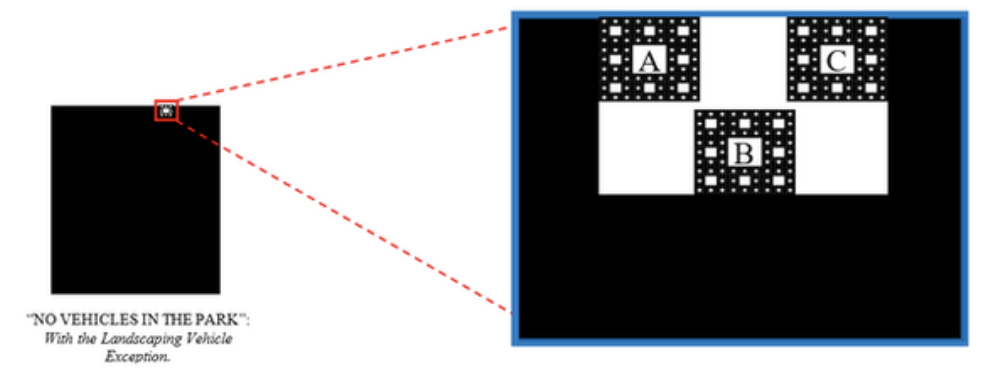

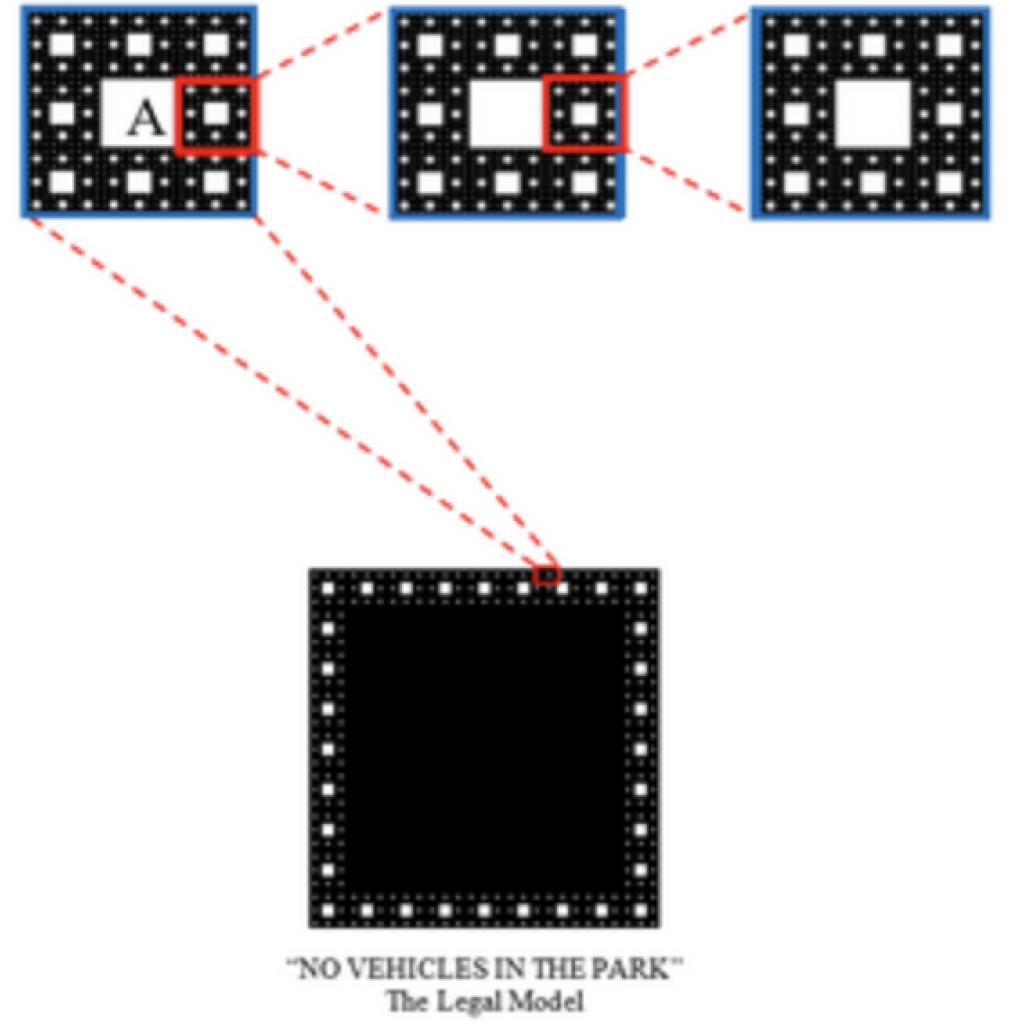

It is almost certain that neither Bill the landscaper nor the police officer will be found in violation of the park’s no-vehicle rule, pursuant to a “presumed exception” to the law that permits landscaping trucks and emergency vehicles to enter the park without penalty for No Vehicle Sign violations. To understand the import of the presumed exception for purposes of our model, an initial model of law can be constructed in diagrammatic form. In the model drawn below, behavior subject to penalty for violation of the No Vehicle Sign is represented by the black area. The white area outside the black box represents behaviors that are rightly not subject to penalty for violating the “no-vehicle” rule. This model contains every conceivable behavior; only the behavior subject to penalty for violating the No Vehicle Sign is colored:

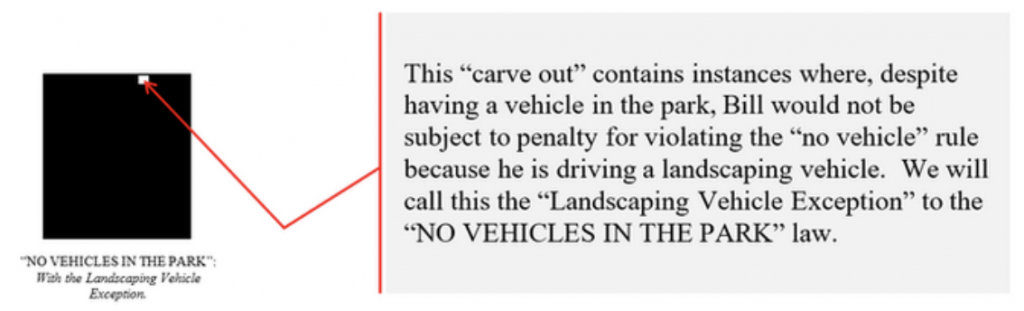

Figuratively speaking and assuming no other laws apply, within the border of the black box are instances only when an individual is (1) in the park and (2) using a vehicle. As shown by the previous example, however, there are times when someone is both (1) in the park and (2) using a vehicle yet still not reasonably considered violative of the “no vehicle” rule pursuant to our presumed exceptions. A presumed exception for landscaping vehicles, in our model, looks something like:

But what if [A] Bill sells his landscaping vehicle to a vagrant who then drives it into the park? Or if [B] someone buys a landscaping vehicle from a dealer and drives it into the park to do donuts in the garden? Or if [C] Bill takes his landscaping vehicle and drives it around the park in the middle of the night to destroy the garden, thereby providing himself with job security? These activities presumably would constitute behavior subjecting the culprit to penalty, and thus, despite falling into the “Landscaping Vehicle Exception,” they would nonetheless be represented by a black area. The Landscaping Vehicle Exception, then, has its own set of exceptions, which make for “carve-outs” of the “carve-out.”
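The “carve-outs of the carve-out” structure can be sketched as a rule that holds a list of exceptions, each of which is itself a rule with its own exceptions. This is a hedged illustration only: the `Rule` class, the fact names such as `damaging_garden`, and the single abuse exception are assumptions made up for the example, and a real set of exceptions would never be this short.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Facts = Dict[str, bool]  # hypothetical fact labels mapped to True/False


@dataclass
class Rule:
    applies: Callable[[Facts], bool]  # does this rule reach the facts?
    violation: bool                   # outcome if no exception overrides it
    exceptions: List["Rule"] = field(default_factory=list)

    def decide(self, facts: Facts) -> bool:
        # The first matching exception overrides this rule, and that
        # exception may in turn be overridden by its own exceptions.
        for exc in self.exceptions:
            if exc.applies(facts):
                return exc.decide(facts)
        return self.violation


# Carve-out of the carve-out: a landscaping vehicle used to wreck the garden.
abuse = Rule(lambda f: f.get("damaging_garden", False), violation=True)
# Carve-out: landscaping vehicles are presumed permitted.
landscaping = Rule(lambda f: f.get("landscaping_vehicle", False),
                   violation=False, exceptions=[abuse])
# Base rule: the literal text of the sign.
no_vehicles = Rule(lambda f: f.get("in_park", False) and f.get("using_vehicle", False),
                   violation=True, exceptions=[landscaping])


def violates(facts: Facts) -> bool:
    return no_vehicles.applies(facts) and no_vehicles.decide(facts)


bill_at_work = {"in_park": True, "using_vehicle": True, "landscaping_vehicle": True}
bill_at_night = {**bill_at_work, "damaging_garden": True}
print(violates(bill_at_work), violates(bill_at_night))
```

Every new fact pattern that should change the outcome requires another hand-written `Rule` somewhere in this tree, which is the refinement problem the next paragraph describes.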

These exceptions and subsequent exceptions to them are without limit: change a single material fact of an exception and/or its exceptions, and there lies another exception, and so on, “with an infinite number of potentially necessary rule refinements and exceptions, ultimately resulting in an infinitely intricate border that separates the legal from the illegal.” Andrew Stumpff, The Law is a Fractal: The Attempt to Anticipate Everything.

Fractal’s Formula:

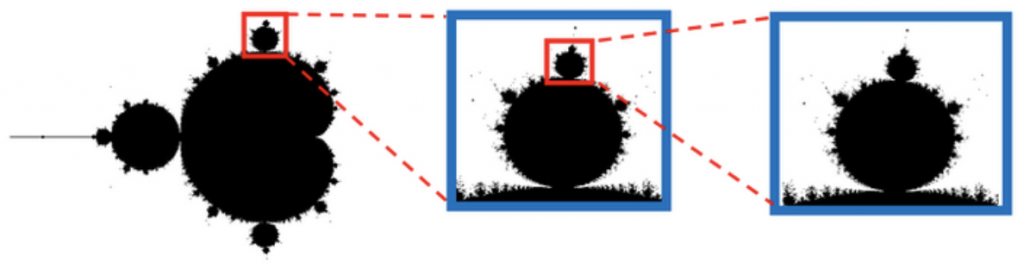

This recursive border complexity is captured by the mathematical construct known as the “fractal.” A mathematical fractal is a shape with an infinitely complex border generated by a formula. The best-known fractal is the Mandelbrot set, named for Benoit Mandelbrot, who coined the term “fractal” and famously mapped the points of the iterative formula $Z_{n+1} = Z_n^2 + c$. When this iterative formula was plotted by computer, the result was a shape with an infinitely complex border:

This is exactly the nature of the legal model’s shape: because human behavior is infinite, so is the complexity of the border between legal and illegal. Further, like a fractal, we can reliably determine (for the most part) what behavior is inside or outside of the model; the fringe cases give rise to infinite complexity. Because legal application involves synthesizing historical data so as to draw the line between legal and illegal, and that line is infinitely complex, the model of legal application can only be drawn by way of fractal. Thus, because the diagrammatic representation of a fractal is guided by a formula that can be inputted into a computer, then so too can legal application be captured by a formula similarly capable of being inputted into a computer.
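The inside-or-outside question for the Mandelbrot formula can be illustrated with the standard escape-time test: iterate the formula from zero and see whether the value ever grows past 2 in magnitude. The function names and the iteration limits below are choices made for this sketch, not anything prescribed by the sources cited here.

```python
def escape_time(c: complex, max_iter: int = 100) -> int:
    """Iterate Z -> Z*Z + c from Z = 0; return the step at which |Z| exceeds 2.

    Returns max_iter if the orbit has not escaped within the budget.
    """
    z = 0j
    for n in range(max_iter):
        if abs(z) > 2.0:
            return n
        z = z * z + c
    return max_iter


def appears_inside(c: complex, max_iter: int = 100) -> bool:
    # "Appears" is deliberate: a finite budget can only rule points out.
    return escape_time(c, max_iter) == max_iter


# Points far from the border are easy: 0 stays put, 1 escapes almost at once.
print(appears_inside(0j), appears_inside(1 + 0j))

# A point just outside the border looks "inside" until enough work is done.
fringe = 0.26 + 0j
print(appears_inside(fringe, max_iter=10), appears_inside(fringe, max_iter=100))
```

Most points are classified quickly and reliably, while a point near the border can be misclassified until the iteration budget is raised, which mirrors how the fringe cases carry the complexity in the legal model.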

The AI Judge:

It is entirely possible to have an AI-powered computer collect every factual scenario from every legal case to date, apply its machine-learned formula to novel cases, and make determinations in uniformity with its algorithm derived from the data set. In our case, the AI Judge would process thousands (if not millions) of instances wherein a landscaper had been charged with violating the “NO VEHICLES IN THE PARK” rule and apply them to Bill, ideally finding his situation sufficiently similar to previous instances wherein the landscaper was found not to have violated the rule and subsequently issuing an order freeing Bill of culpability. The more data points the AI Judge has (like what time Bill was at the park, what he was doing with the vehicle, etc.), the more accurate its adjudication. But what if there were no recorded cases wherein a landscaper had been charged with violating the “NO VEHICLES IN THE PARK” rule? Or what if the only recorded case involving a landscaper dealt with a landscaper who was, in that specific circumstance, rightly found guilty? In order to appropriately calculate these fringe cases and the presumed exceptions, the AI Judge must be guided by a formula similar to the formula mapped by Dr. Mandelbrot: one that accounts for the infinite complexities perforating the border separating legality and illegality, and that is able to operate without precise precedent.
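The precedent-matching step, and the gap it leaves, can be sketched with a deliberately crude nearest-precedent lookup. Everything here is an assumption for illustration: the fact labels, the similarity measure (share of matching facts), the 0.75 threshold, and the single invented precedent. A real machine-learning system would learn its notion of similarity rather than have it hard-coded.

```python
from typing import Dict, List, Optional, Tuple

Facts = Dict[str, bool]
Precedent = Tuple[Facts, bool]  # (facts of the past case, found in violation?)


def similarity(a: Facts, b: Facts) -> float:
    """Share of fact labels on which two cases agree (missing means False)."""
    keys = set(a) | set(b)
    if not keys:
        return 1.0
    same = sum(1 for k in keys if a.get(k, False) == b.get(k, False))
    return same / len(keys)


def decide_by_precedent(facts: Facts, precedents: List[Precedent],
                        min_similarity: float = 0.75) -> Optional[bool]:
    """Follow the closest past case, or return None if nothing is close enough."""
    best_verdict: Optional[bool] = None
    best_score = 0.0
    for past_facts, verdict in precedents:
        score = similarity(facts, past_facts)
        if score > best_score:
            best_verdict, best_score = verdict, score
    return best_verdict if best_score >= min_similarity else None


# The only recorded landscaper case: a night-time garden wrecker, found guilty.
recorded = [({"landscaping_vehicle": True, "damaging_garden": True, "at_night": True}, True)]
bill = {"landscaping_vehicle": True, "damaging_garden": False, "at_night": False}

print(decide_by_precedent(bill, recorded))  # no sufficiently similar precedent
```

Without the threshold, the lookup would simply follow the lone guilty verdict and convict Bill; with it, the system can only report that it has no answer. Neither result is the exoneration a human judge would reach.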

The Legal Formula:

When faced with a lack of precedent, attorneys are expected to make arguments based on policy. In fact, even with applicable-but-damning precedent, an attorney can successfully overcome otherwise binding precedent pursuant to policy considerations when adherence to the former would result in injustice. The law is, in effect, guided by policy: irrespective of precedent, policy can bend law towards justice and account for things like the presumed exceptions discussed above. In the case of the “NO VEHICLES IN THE PARK” rule, the underlying policy might be articulated as one aimed at furthering the patrons’ quiet enjoyment of the park while mitigating behavior that would disturb the park’s neighbors. Thus, if an AI can account for whether the behavior at issue infringed on patrons’ quiet enjoyment of the park or disturbed the park’s neighbors, the AI could determine that, irrespective of a lack of applicable historical data, Bill did not violate the rule because his actions furthered the underlying policy. Our AI Judge’s formula is then, “in order to further patrons’ quiet enjoyment of the park and to not disturb the park’s neighbors: if an individual is in the park, and the individual is using a vehicle, then that individual is violating the No Vehicles in the Park Rule.” We could then feed the AI Judge every factual scenario involving individuals disturbing parks’ neighbors and/or preventing patrons from enjoying the park quietly, and, when presented with an individual using a vehicle in the park, the AI Judge would cross-check the facts at bar against the policy considerations. If it found that the individual’s use of the vehicle in the park furthered the underlying policy, then, despite the individual using a vehicle in the park, the AI Judge would find that the individual did not violate the rule.
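The policy cross-check described above can be sketched by adding a policy test to the literal rule. This is a simplified illustration under stated assumptions: the `Conduct` type and its fields are invented for the example, and the two policy questions are reduced to yes/no inputs that someone, or something, must already have answered.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Conduct:
    """Hypothetical fact pattern; all four fields are illustrative assumptions."""
    in_park: bool
    using_vehicle: bool
    disturbs_quiet_enjoyment: bool  # does it infringe on patrons' quiet enjoyment?
    disturbs_neighbors: bool        # does it disturb the park's neighbors?


def violates_with_policy_check(c: Conduct) -> bool:
    # Step 1: the literal text of the rule.
    within_literal_rule = c.in_park and c.using_vehicle
    # Step 2: the policy cross-check. Conduct that does not undermine
    # the rule's purpose is treated as a presumed exception.
    undermines_policy = c.disturbs_quiet_enjoyment or c.disturbs_neighbors
    return within_literal_rule and undermines_policy


bill = Conduct(in_park=True, using_vehicle=True,
               disturbs_quiet_enjoyment=False, disturbs_neighbors=False)
colton = Conduct(in_park=True, using_vehicle=True,
                 disturbs_quiet_enjoyment=True, disturbs_neighbors=True)
print(violates_with_policy_check(bill), violates_with_policy_check(colton))
```

The code is trivial precisely because the hard part has been pushed into the inputs: deciding whether a given act disturbs quiet enjoyment is itself the judgment call.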

The Human Intuition:

The problem with an AI judge is that, no matter how many factual scenarios you feed it, it may never be able to account for an unanticipated exception that would otherwise be captured by policy considerations. Since potential human behavior is infinite, a data collection of every human action ever taken is still mathematically closer to zero data than to the infinite amount of data needed to capture all potential human behavior. But how can humans apply policy to infinite situations? Our ability to apply policy is born of a sort of “moral common sense”: something that, while often taken for granted by humans, can never be accounted for by pure sets of data. Since the AI’s algorithms and formulas are confined by data points, an AI will never break out of the boundaries of making decisions based strictly on “what has happened.” Thus, because the formula of legal application that accounts for the infinite complexities of human behavior is directed, at least in part, by policy, and policy is born of common sense, an AI adjudicator will almost certainly need some level of human interaction.

AI, in Practice:

AI’s practical application was perhaps best summarized by Thomas Barnett of Paul Hastings at IG3 West 2019 when he stated “the question shouldn’t be how AI can replace the human intellect, but, rather, how can AI supplement human intellect.” Indeed, even the most robust AI systems still require some level of human direction (see, e.g., Google AI’s contract metadata extraction tool that requires that a human confirm the extracted data).

This understanding of AI is, for example, what drove the development of Briefpoint—an AI-backed litigation document generator. Instead of using AI to assume what a litigator will do, Briefpoint asks what the litigator would like to do first, then uses AI to amplify that decision. It will never draft facts in the light most favorable for an attorney’s client, nor come up with creative applications of the law to facts. Instead, it operates at the behest of the attorney to effect time savings via automation. Used in this—perhaps modest—manner, AI is made reliable and thereby suitable for the high threshold of the accuracy demanded by the practice of litigation.